Soham Bot: Automating job search with Handinger and n8n

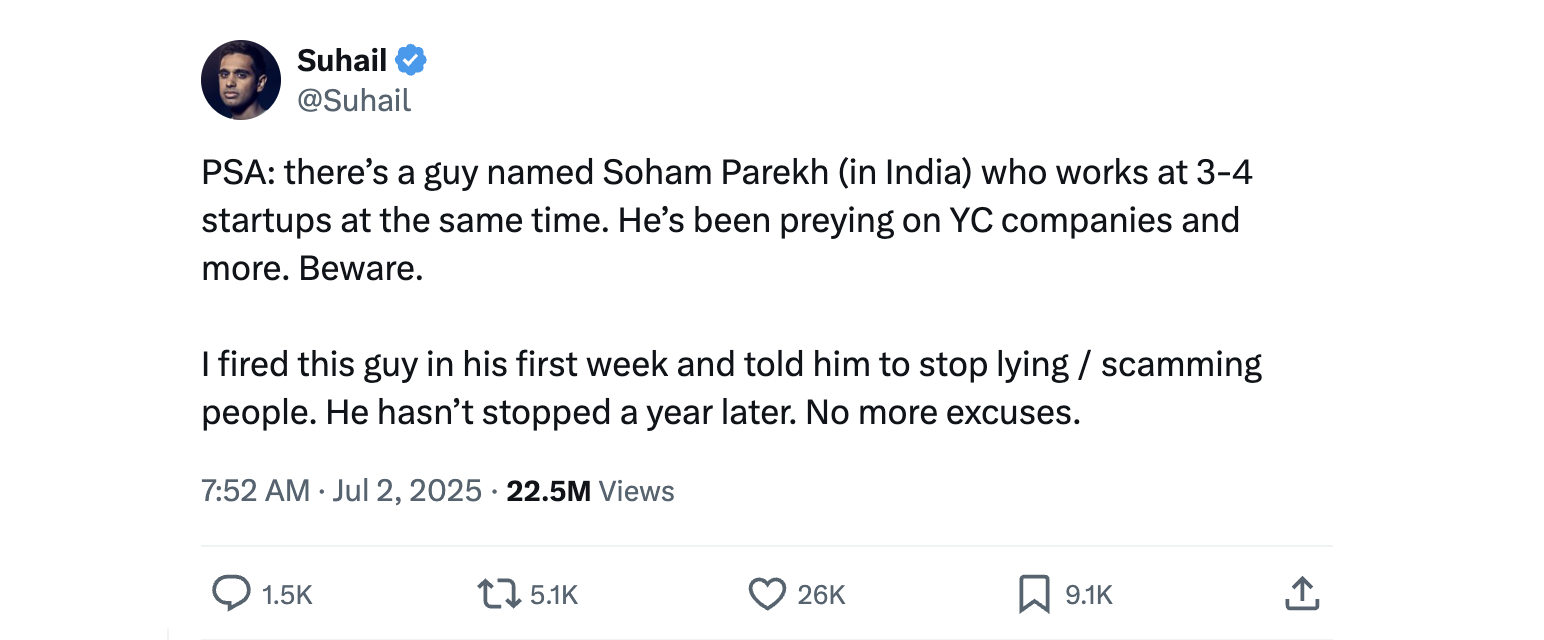

Unless you’ve been living under a rock, you’ve probably heard about Soham Parekh, a guy who, despite the current tech industry downturn and widespread “return to office” mandates, managed to simultaneously work at several high-profile companies.

Every tech CEO has spent the week smugly saying “I told you so” to their remote-friendly CTO. Meanwhile, every CTO has searched “Soham” in their inbox and ATS. It’s chaos out there, but also weirdly satisfying.

But hey, if you’re struggling to land a job, you might find a little inspiration in this story. “What would Soham do?”, you might ask yourself after receiving another rejection. Well, he is reportedly a good engineer, and good engineers automate.

Automating your job search

This is a lightweight introduction to n8n, a tool for building automations and AI agents. We’ll use n8n alongside Handinger to extract structured job offer data from websites.

We’ll focus specifically on sourcing job listings, a repetitive and tedious task: You typically have to scan multiple portals, read through each offer, and determine if it’s a match. One would rather spend that time crafting a compelling, obviously-human cover letter.

Here’s what we’ll automate:

- Set up a daily scheduler

- Fetch job listings from Remote OK

- Fetch job listings from We Work Remotely

- Craft and send an email with the job listings

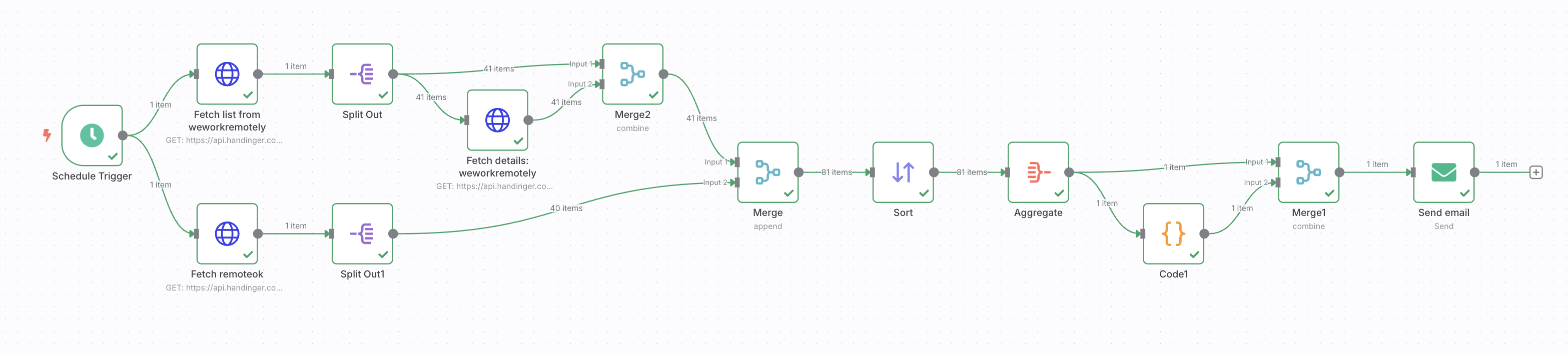

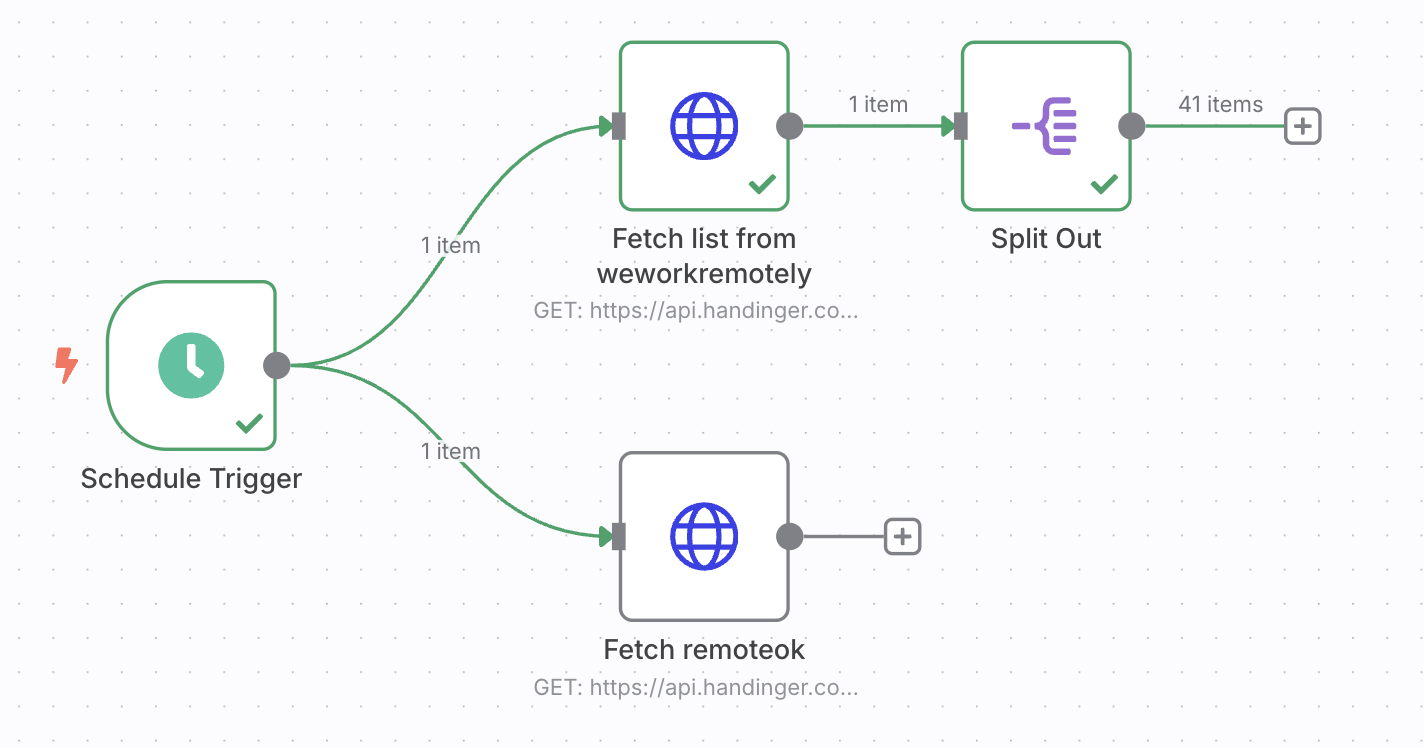

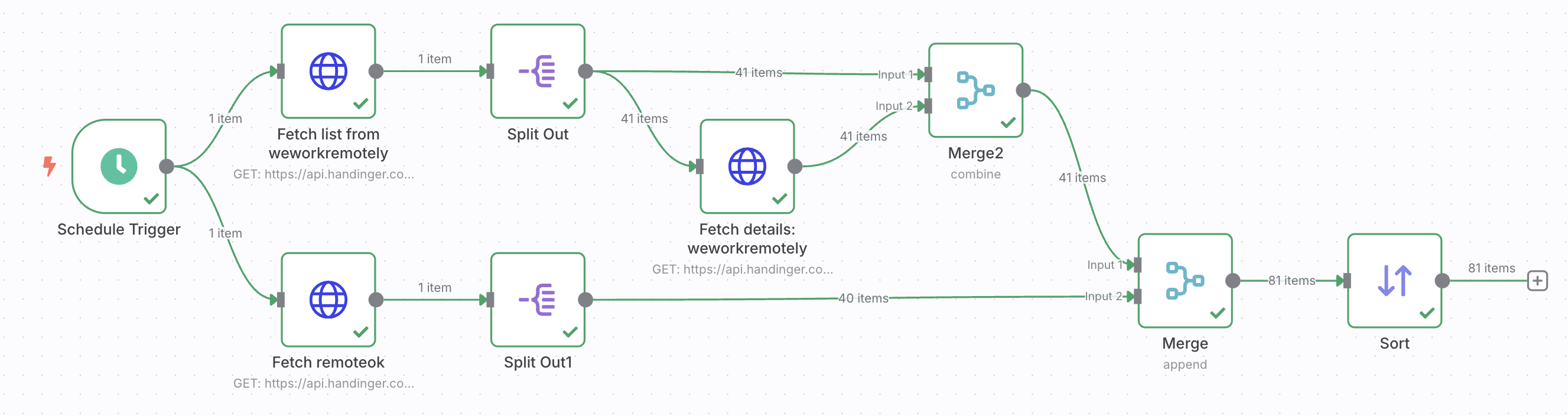

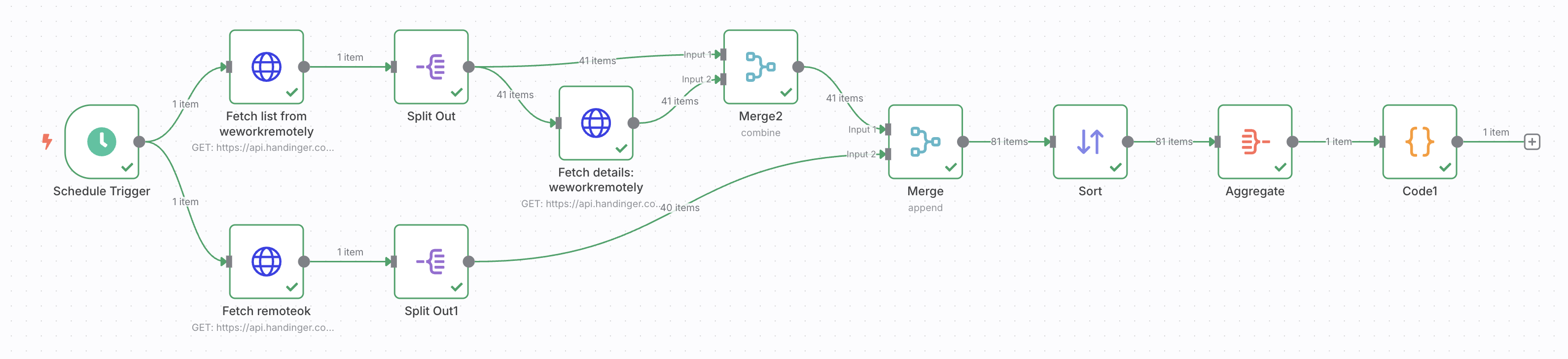

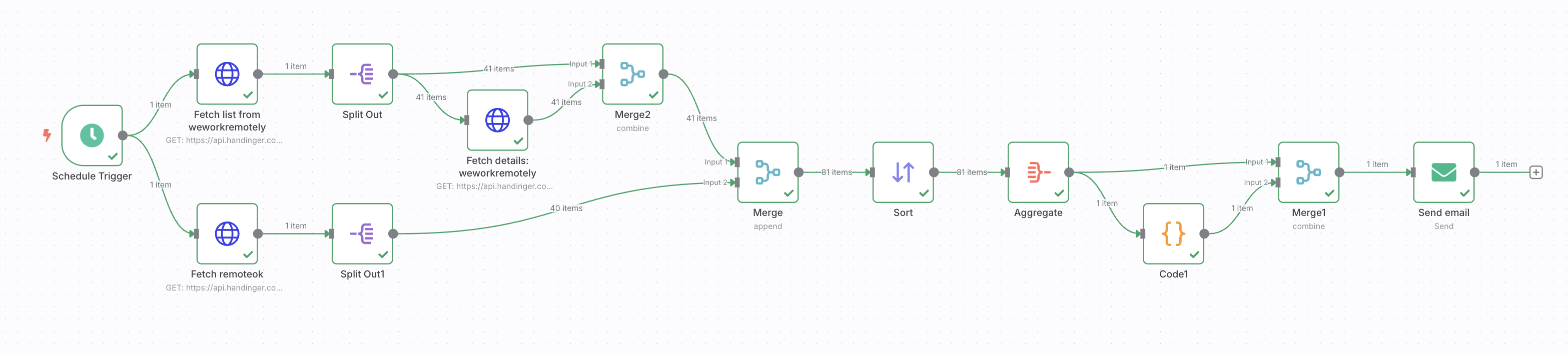

The finished workflow will look like this:

Here is the full workflow gist. You can import it into n8n to follow along with this tutorial, but remember to replace the Handinger API key with your own.

Sounds good? Let’s get started!

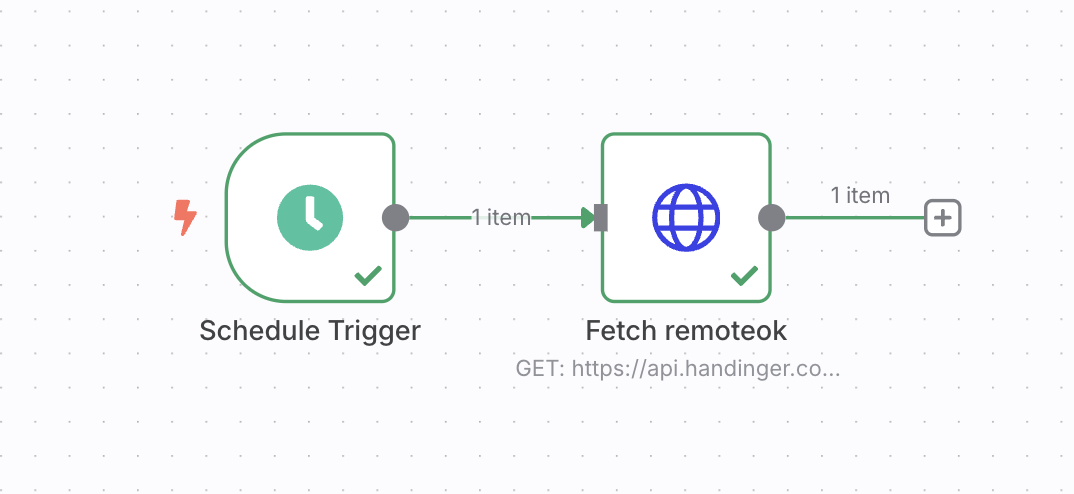

Step 1: Set up a daily scheduler

In n8n, workflows are built from nodes. Each node has inputs and outputs, and you connect them with arrows. Simple and intuitive.

Every workflow needs a Trigger node. You can use manual triggers, webhooks, or listen to app events. For our case, we’ll use a scheduler trigger to run the workflow once a day.

Step 2: Fetch job listings from Remote OK

We will start with Remote OK, a well-known remote job portal by Pieter Levels. Instead of scraping the homepage, we will use the get_jobs endpoint, which is faster and more reliable.

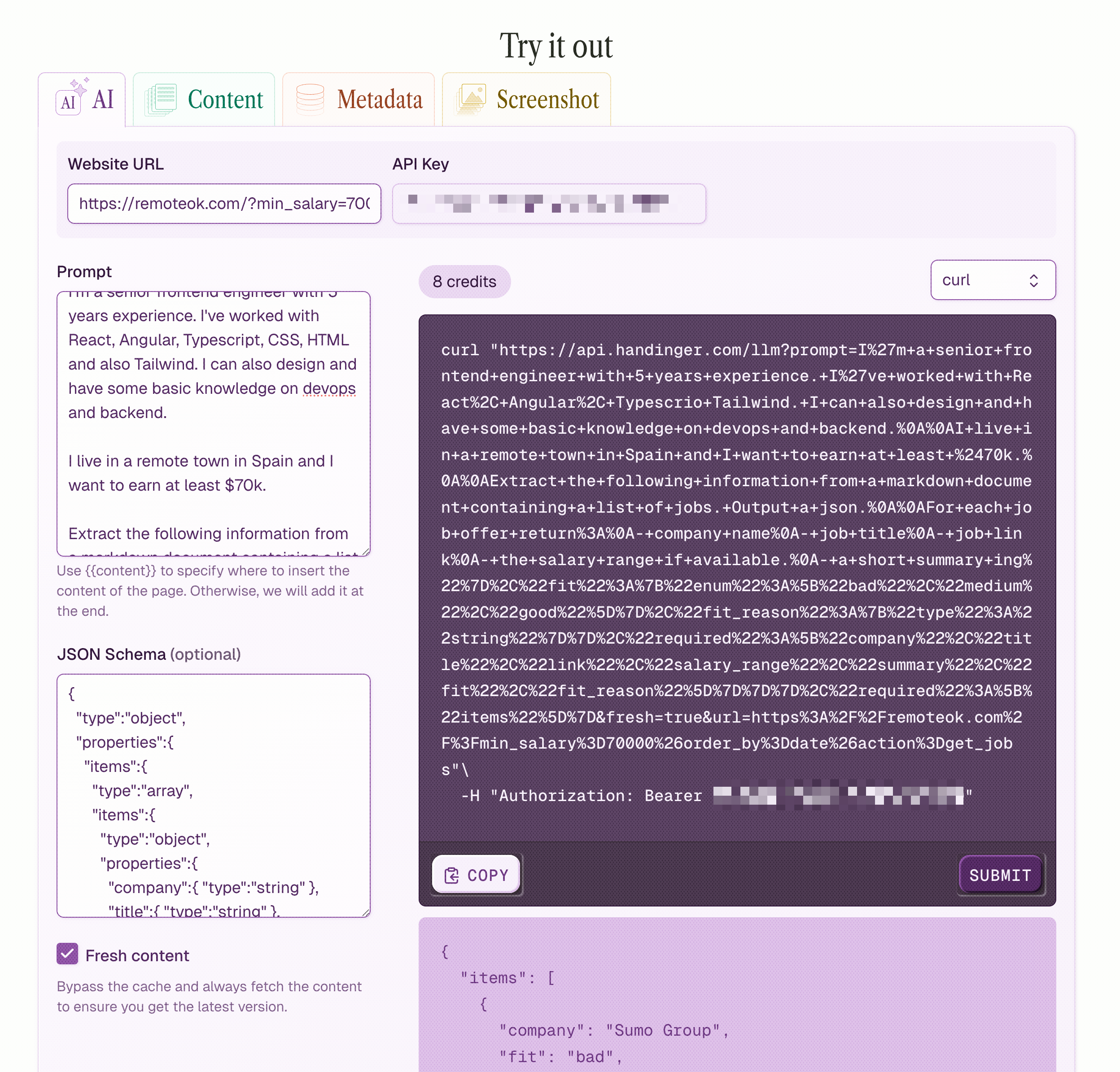

Log into Handinger and experiment with prompts. Once satisfied, use a JSON Schema generator to define the structure of your response. This ensures that Handinger always returns the attributes you want.

Note: Handinger caches pages for 2 weeks. The Fresh Content flag bypasses the cache. During testing, you can leave the flag off so that responses come from the cache, which is faster and free.

For Remote OK, this endpoint already contains all the information we want, so we can extract it with this prompt:

I'm a senior frontend engineer with 5 years of experience. I've worked with React, Angular, TypeScript, CSS, HTML, and Tailwind. I can design and have basic knowledge of DevOps and backend development.

I live in a remote town in Spain and want to earn at least $70k.

Extract the following from a markdown list of jobs. Return a JSON:

For each job offer return:

- company name
- job title
- job link
- the salary range if available.
- a short summary of the job offer
- fit: do the technologies, the experience, the salary, and the location approximately match? Can be "bad", "medium", "good"
- fit reason: Explanation of the fit

Content:

(Handinger will append the job listing after Content:. Use {{content}} if needed elsewhere in the prompt.)

The schema:

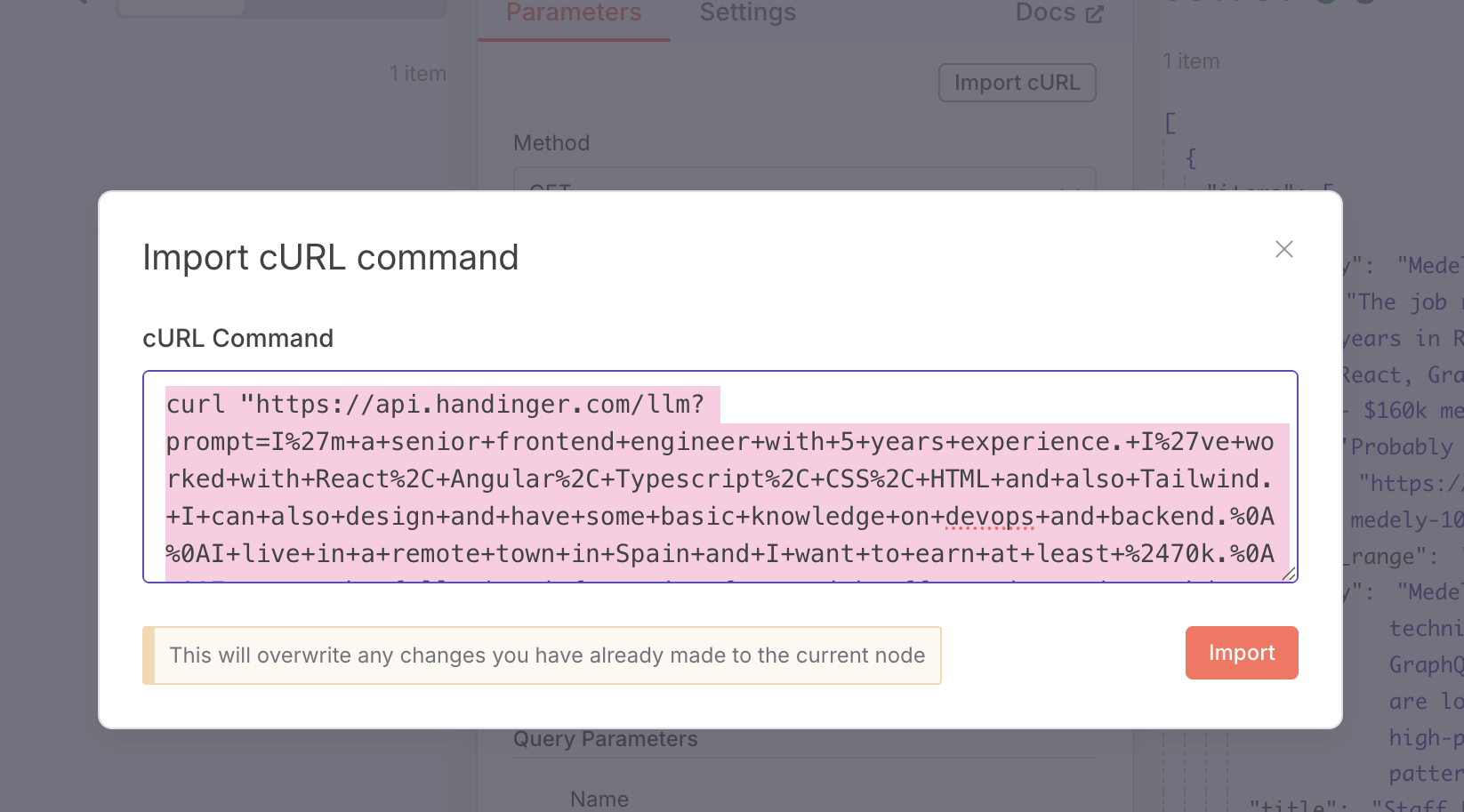

{
  "type": "object",
  "properties": {
    "items": {
      "type": "array",
      "items": {
        "type": "object",
        "properties": {
          "company": { "type": "string" },
          "title": { "type": "string" },
          "link": { "type": "string" },
          "salary_range": { "type": "string" },
          "summary": { "type": "string" },
          "fit": { "enum": ["bad", "medium", "good"] },
          "fit_reason": { "type": "string" }
        },
        "required": [
          "company",
          "title",
          "link",
          "salary_range",
          "summary",
          "fit",
          "fit_reason"
        ]
      }
    }
  },
  "required": ["items"]
}

Once ready, switch to curl view and copy the snippet. In n8n, add an HTTP Request node and click on the Import from curl button. Paste the snippet and execute the step.
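If you want to double-check what the step returns, the schema above translates into a few plain checks. The following is a minimal sketch, not part of the original workflow: the function name `findSchemaProblems` and the sample response are made up for illustration, and only the field names and the allowed `fit` values come from the schema.

```javascript
// Allowed values for "fit", taken from the schema above.
const FIT_VALUES = ["bad", "medium", "good"];

// String fields the schema marks as required on every job offer.
const REQUIRED_STRINGS = ["company", "title", "link", "salary_range", "summary", "fit_reason"];

// Returns a list of human-readable problems. An empty list means the
// response has the shape described by the schema.
function findSchemaProblems(response) {
  if (!response || !Array.isArray(response.items)) {
    return ['"items" is missing or is not an array'];
  }
  const problems = [];
  response.items.forEach((offer, index) => {
    if (offer === null || typeof offer !== "object") {
      problems.push(`items[${index}] should be an object`);
      return;
    }
    for (const field of REQUIRED_STRINGS) {
      if (typeof offer[field] !== "string") {
        problems.push(`items[${index}].${field} should be a string`);
      }
    }
    if (!FIT_VALUES.includes(offer.fit)) {
      problems.push(`items[${index}].fit should be one of ${FIT_VALUES.join(", ")}`);
    }
  });
  return problems;
}

// Made-up sample response, for illustration only.
const sample = {
  items: [
    {
      company: "Acme",
      title: "Senior Frontend Engineer",
      link: "https://example.com/jobs/1",
      salary_range: "$80k - $100k",
      summary: "React and TypeScript role, fully remote.",
      fit: "good",
      fit_reason: "Stack, salary, and location all match.",
    },
  ],
};

console.log(findSchemaProblems(sample)); // → []
```

A check like this could live in a Code node right after the HTTP Request node, although with the schema enforced on Handinger's side it is mostly useful while experimenting.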

Boom. It works. You’re officially Soham-ing the job market.

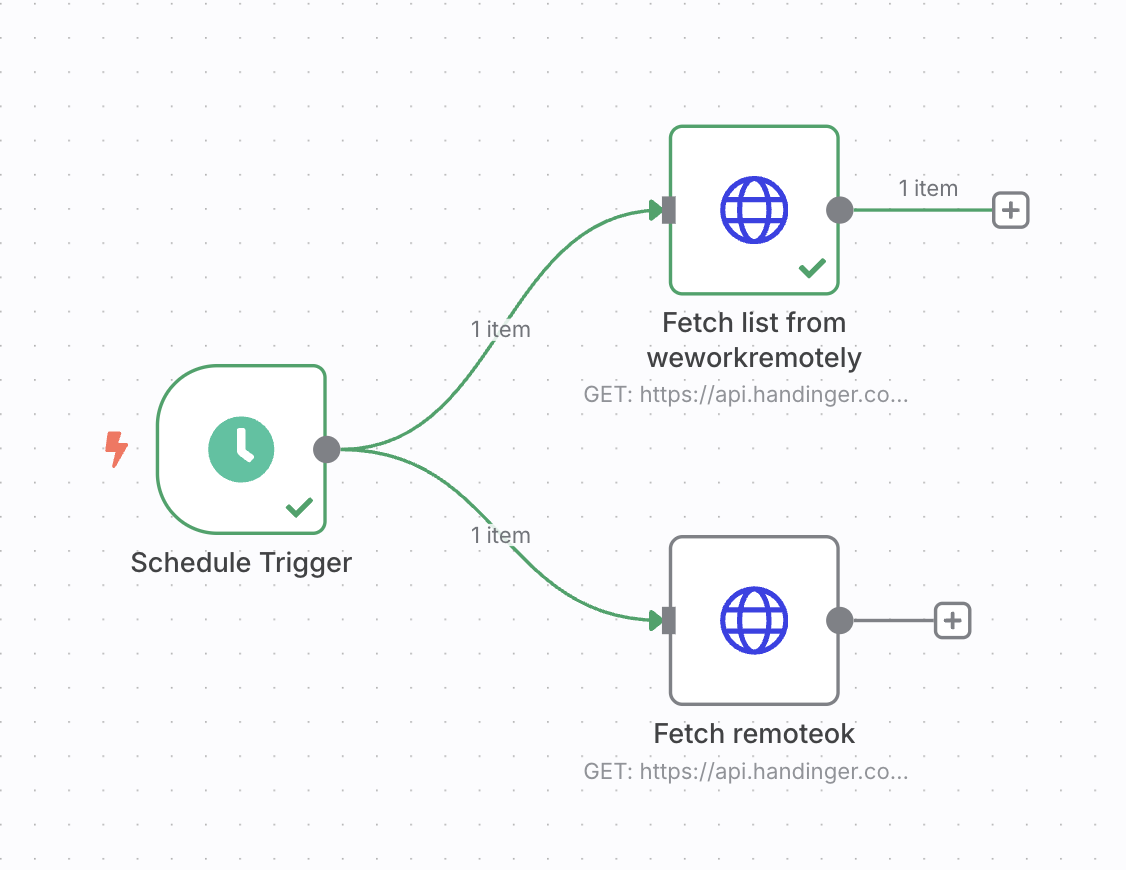

Step 3: Fetch job listings from We Work Remotely

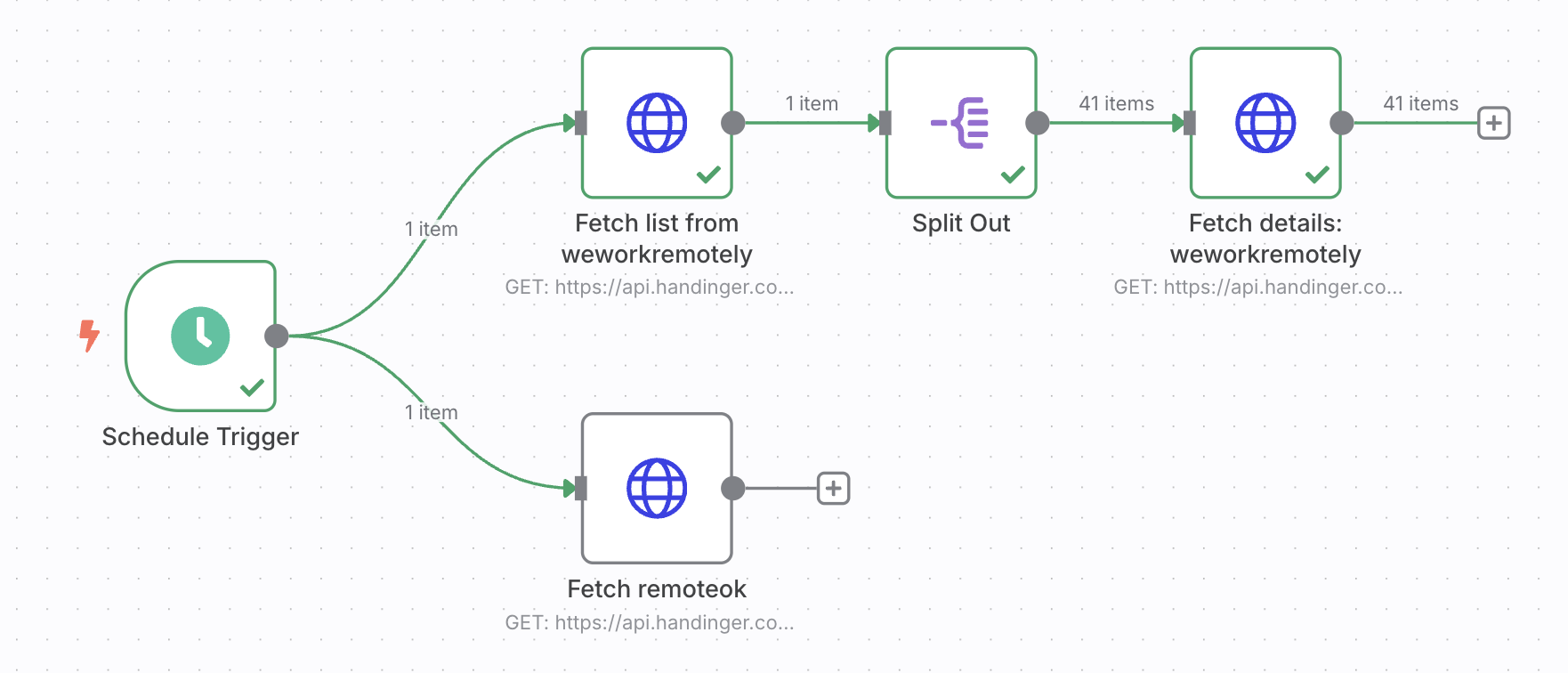

Next, we’ll do something similar with We Work Remotely, another popular job board. However, its listings page doesn’t include as much detail up front, so we’ll need to visit each individual job page to extract full information, a common pattern in web scraping. Thankfully, n8n makes this straightforward.

Start by using Handinger to extract the list of job links from https://weworkremotely.com/categories/remote-full-stack-programming-jobs. Use the following prompt:

Given a markdown with a list of job offers. For each job, extract the job offer link.

Content:

The JSON Schema for this prompt is the following:

{
  "type": "object",
  "properties": {
    "link": {
      "type": "array",
      "items": { "type": "string" }
    }
  }
}

Create a new HTTP Request node in n8n, import your Handinger curl snippet, and verify that the output includes the links.
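When verifying the output, it may be worth checking that every link is an absolute URL, since the next step requests each one directly. The sketch below assumes the extracted links could come back relative or duplicated, which the original does not state. The helper name `normalizeLinks` and the sample paths are hypothetical; only the base URL comes from the article.

```javascript
// Listings page used in this step.
const BASE_URL = "https://weworkremotely.com/categories/remote-full-stack-programming-jobs";

// Resolve each link against the listings page and drop anything that is
// not an http(s) URL. Duplicates are removed while keeping the order.
function normalizeLinks(links, baseUrl = BASE_URL) {
  const seen = new Set();
  const result = [];
  for (const link of links) {
    let url;
    try {
      url = new URL(link, baseUrl);
    } catch {
      continue; // not parseable as a URL, skip it
    }
    if (url.protocol !== "http:" && url.protocol !== "https:") continue;
    if (!seen.has(url.href)) {
      seen.add(url.href);
      result.push(url.href);
    }
  }
  return result;
}

// Made-up input, for illustration only.
const cleaned = normalizeLinks([
  "/remote-jobs/example-co-frontend-engineer",
  "https://weworkremotely.com/remote-jobs/example-co-frontend-engineer",
  "mailto:jobs@example.com",
]);
console.log(cleaned);
```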

So far, so good.

Now, let’s split that array of links into individual items so we can fetch them in parallel. n8n operates on items: each node runs once per item, so if you pass a single item containing an array to the next node, that node only runs once. We’ll use the Split Out node to fan out the array into separate items.
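To make the fan-out concrete, here is a rough plain-JavaScript equivalent of what Split Out does to the data. It is an illustration of the idea rather than n8n's actual implementation: the `splitOut` function and the sample URLs are made up, while the `{ json: ... }` item shape matches the one used by the code snippets later in this article.

```javascript
// One incoming item whose "link" field holds the whole array,
// mirroring the schema used for the listings page. The URLs are made up.
const incoming = [
  { json: { link: ["https://example.com/jobs/1", "https://example.com/jobs/2"] } },
];

// Fan out: one output item per array element, keeping the field name,
// so the next node runs once per link.
function splitOut(items, fieldName) {
  return items.flatMap((item) =>
    (item.json[fieldName] ?? []).map((value) => ({ json: { [fieldName]: value } })),
  );
}

const fannedOut = splitOut(incoming, "link");
console.log(fannedOut.length); // → 2
console.log(fannedOut[0].json.link); // → https://example.com/jobs/1
```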

Each item (a job link) will then be fed into a second HTTP Request node. Use this prompt in Handinger to extract details from each job page:

I'm a senior frontend engineer with 5 years of experience. I've worked with React, Angular, TypeScript, CSS, HTML, and Tailwind. I can design and have basic knowledge of DevOps and backend development.

I live in a remote town in Spain and want to earn at least $70k.

Extract the following from a markdown list of jobs. Return a JSON:

- company name
- job title
- the salary range if available.
- a short summary of the job offer
- fit: do the technologies, the experience, the salary, and the location approximately match? Can be "bad", "medium", "good"
- fit reason: Explanation of the fit

Content:

And the schema:

{
  "type": "object",
  "properties": {
    "company": { "type": "string" },
    "title": { "type": "string" },
    "salary_range": { "type": "string" },
    "summary": { "type": "string" },
    "fit": { "enum": ["bad", "medium", "good"] },
    "fit_reason": { "type": "string" }
  },
  "required": [
    "company",
    "title",
    "salary_range",
    "summary",
    "fit",
    "fit_reason"
  ]
}

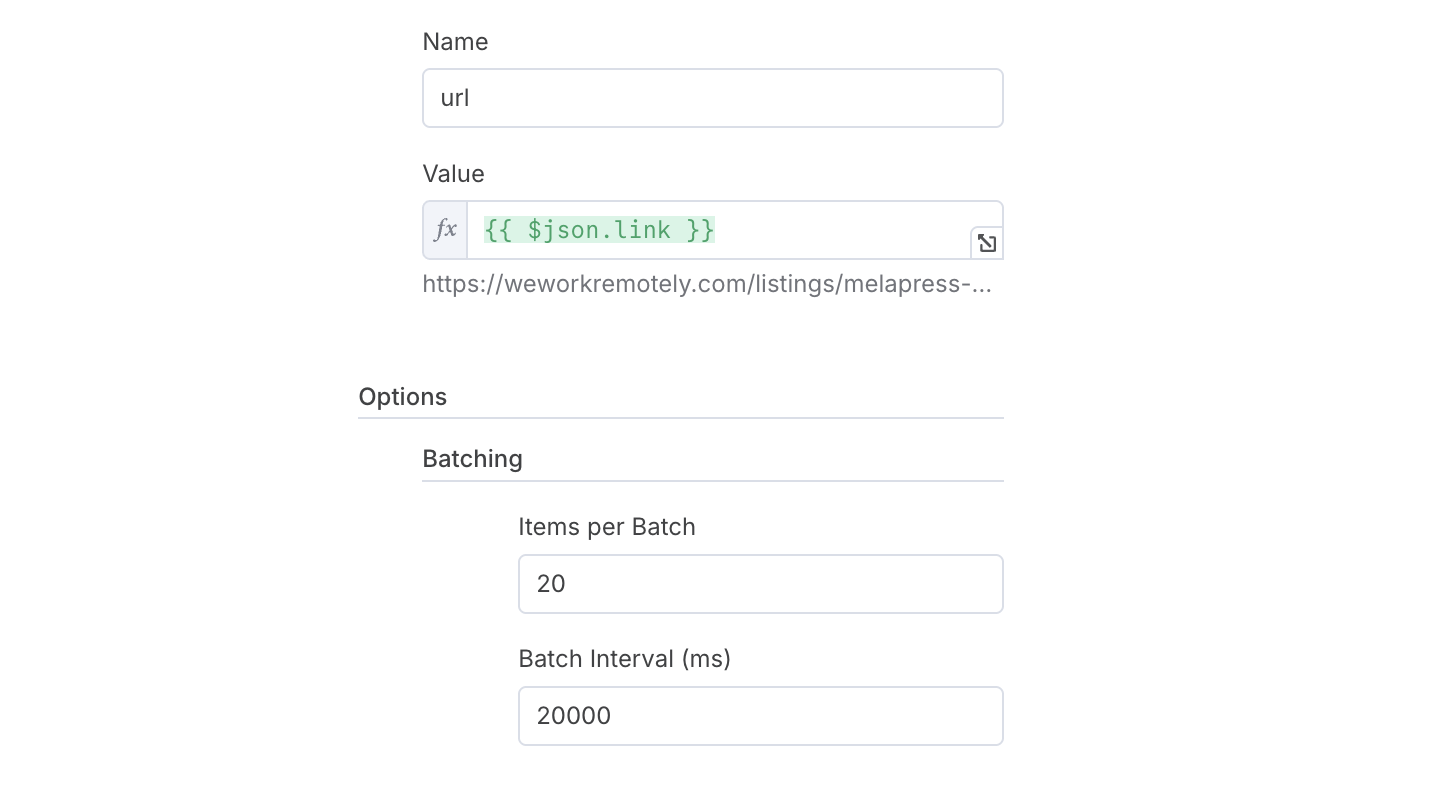

In the second HTTP Request node, set the URL to {{ $json.link }} so each request targets a different job page.

To prevent being rate limited (HTTP 429 errors), use batching. A batch size of 20 and a timeout of 20,000 milliseconds (20s) should be safe.
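Conceptually, batching means sending a limited number of requests at once and pausing before the next group. The sketch below shows that idea in plain JavaScript. It assumes the 20,000 ms value acts as a pause between batches, which is an interpretation, and `chunk`, `runInBatches`, and the fake worker are hypothetical names that are not part of n8n.

```javascript
// Split a list into consecutive chunks of at most `size` elements.
function chunk(list, size) {
  const chunks = [];
  for (let i = 0; i < list.length; i += size) {
    chunks.push(list.slice(i, i + size));
  }
  return chunks;
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Run `worker` over every element, one batch at a time, pausing between
// batches (assumed meaning of the 20s setting). Requests inside a batch
// run in parallel, and results keep the order of the input list.
async function runInBatches(list, worker, batchSize = 20, pauseMs = 20000, wait = sleep) {
  const results = [];
  const batches = chunk(list, batchSize);
  for (let i = 0; i < batches.length; i++) {
    const batchResults = await Promise.all(batches[i].map((element) => worker(element)));
    results.push(...batchResults);
    if (i < batches.length - 1) await wait(pauseMs);
  }
  return results;
}

// 45 links would be sent as three batches: 20, 20, and 5.
const sizes = chunk(Array.from({ length: 45 }, (_, i) => i), 20).map((c) => c.length);
console.log(sizes); // → [ 20, 20, 5 ]
```

Because each batch is awaited before the next one starts and `Promise.all` preserves order, the results line up with the input links, which matters for the position-based merge that follows.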

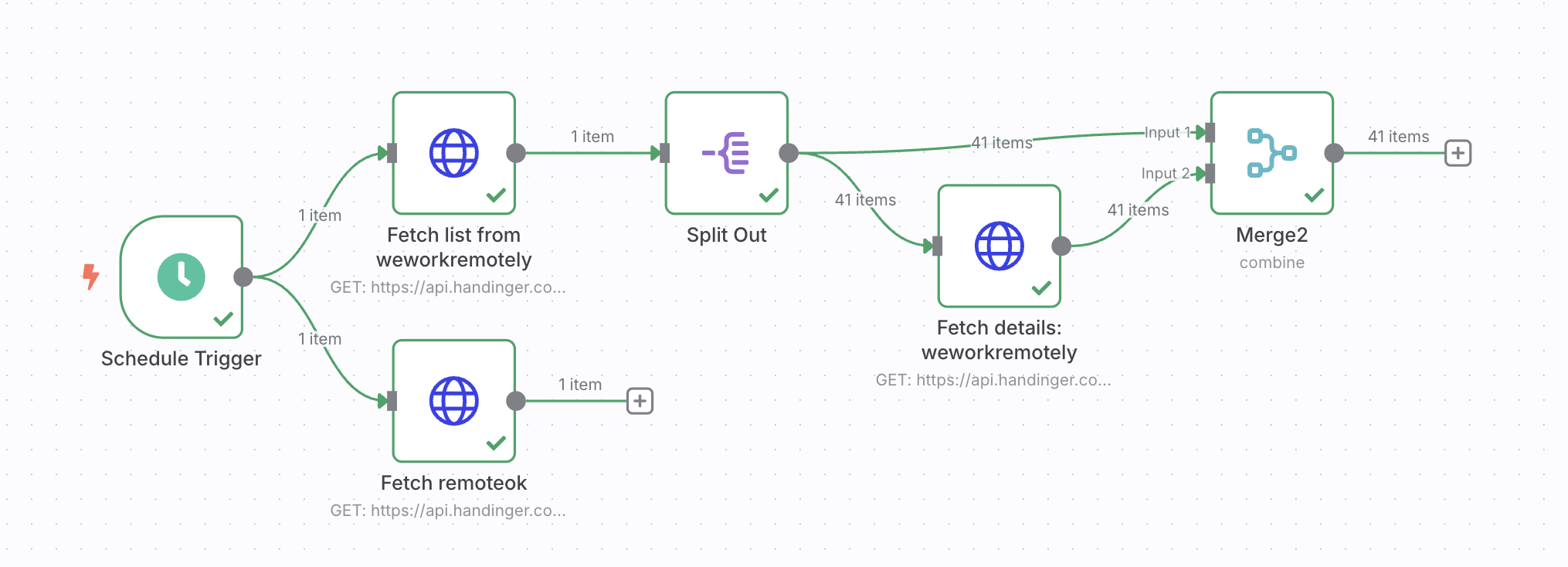

Once all job details are fetched, use the Merge node to combine the link data with the extracted content. Use the “Position” option: after splitting the array of links and processing each one with an HTTP Request, the outputs are still in the same order as the input list, so the first link corresponds to the first extracted job offer, the second link to the second job offer, and so on.
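Merging by position amounts to zipping two lists by index. Here is a minimal sketch of that idea, with made-up sample data and a hypothetical `mergeByPosition` function. Dropping unpaired items when the lists differ in length is a choice of this sketch, not a statement about how n8n behaves.

```javascript
// Pair the i-th item of each list and combine their fields.
// Items without a partner on the other side are dropped in this sketch.
function mergeByPosition(left, right) {
  const length = Math.min(left.length, right.length);
  const merged = [];
  for (let i = 0; i < length; i++) {
    merged.push({ json: { ...left[i].json, ...right[i].json } });
  }
  return merged;
}

// Made-up data: links from Split Out, details from the second HTTP Request.
const links = [
  { json: { link: "https://example.com/jobs/1" } },
  { json: { link: "https://example.com/jobs/2" } },
];
const details = [
  { json: { company: "Acme", title: "Frontend Engineer" } },
  { json: { company: "Globex", title: "Full-Stack Developer" } },
];

const offersWithLinks = mergeByPosition(links, details);
console.log(offersWithLinks[1].json.link); // → https://example.com/jobs/2
```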

Step 4: Craft and send an email with the job listings

At this point, we have two sets of job offers—one from Remote OK and one from We Work Remotely. To combine them, we’ll use another Merge node, this time set to Append. This just concatenates all items into a single list.

Next, we want to sort the job offers by how well they match our profile (fit). Since fit uses a custom order (“good”, “medium”, “bad”), we’ll add a Sort node with a custom compare function:

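// Lower number = better fit, so "good" offers come first.
const fieldName = 'fit';
const priority = { good: 0, medium: 1, bad: 2 };

// a sorts before b
if (priority[a.json[fieldName]] < priority[b.json[fieldName]]) {
  return -1;
}

// a sorts after b
if (priority[a.json[fieldName]] > priority[b.json[fieldName]]) {
  return 1;
}

// same fit: keep the existing order
return 0;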
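To try the ordering outside n8n, the same priority map can be passed to `Array.prototype.sort`. This is a sketch with made-up offers. The subtraction-based comparator and the fallback rank for unknown values are variations introduced here, not the author's code.

```javascript
const priority = { good: 0, medium: 1, bad: 2 };

// Unknown or missing fit values are ranked last (an addition in this sketch).
const rank = (item) => priority[item.json.fit] ?? 3;

// Negative when a should come first, positive when b should, 0 when equal.
function compareByFit(a, b) {
  return rank(a) - rank(b);
}

// Made-up offers, for illustration only.
const offers = [
  { json: { title: "C", fit: "bad" } },
  { json: { title: "A", fit: "good" } },
  { json: { title: "B", fit: "medium" } },
  { json: { title: "D", fit: "good" } },
];

// Copy before sorting so the original list is left untouched.
const sorted = [...offers].sort(compareByFit);
console.log(sorted.map((offer) => offer.json.title)); // → [ 'A', 'D', 'B', 'C' ]
```

Since `sort` is stable in modern JavaScript engines, offers with the same fit keep their original relative order.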

Once sorted, use the Aggregate node, which is the opposite of Split Out, to collapse all job offer items into a single item again.
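In plain JavaScript terms, aggregating is the reverse of the earlier fan-out: many items go in, and one item holding an array comes out. The sketch below is an illustration only. The `aggregate` function is hypothetical, and the `data` field name is taken from the email code in this article, which reads `json.data`.

```javascript
// Collapse many items into a single item whose "data" field holds
// the json payload of every input item, in order.
function aggregate(items, outputField = "data") {
  return [{ json: { [outputField]: items.map((item) => item.json) } }];
}

// Made-up sorted offers, for illustration only.
const sortedOffers = [
  { json: { title: "A", fit: "good" } },
  { json: { title: "B", fit: "medium" } },
];

const aggregated = aggregate(sortedOffers);
console.log(aggregated.length); // → 1
console.log(aggregated[0].json.data.length); // → 2
```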

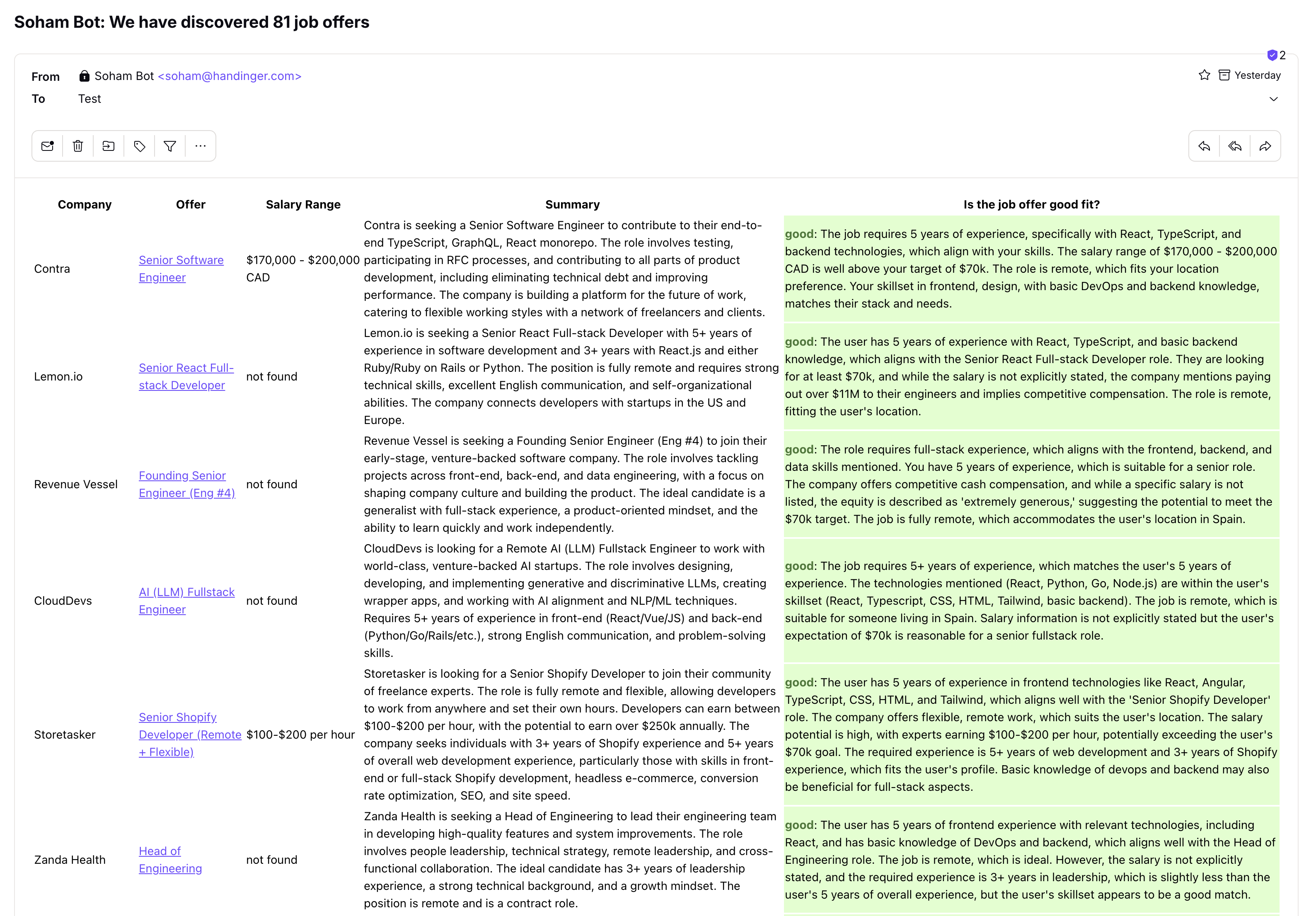

We’ll now generate an HTML email using a Code node. Here’s a code snippet that converts the offers into a color-coded HTML table:

// Collect the aggregated offers from the "data" field of every incoming item.
const items = $input.all().flatMap((item) => item.json).flatMap((json) => json.data);
console.log(items);

// Build one table row per offer; the last cell is color-coded by fit.
const htmlTable = `
  <table>
    <tr>
      <th>Company</th>
      <th>Offer</th>
      <th>Salary Range</th>
      <th>Summary</th>
      <th>Is the job offer good fit?</th>
    </tr>
    ${items
      .map(
        (item) => `
    <tr>
      <td>${item.company}</td>
      <td><a href="${item.link}">${item.title}</a></td>
      <td>${item.salary_range}</td>
      <td>${item.summary}</td>
      <td style="${
        item.fit === 'good'
          ? 'background: #ddffcc'
          : item.fit === 'medium'
          ? 'background: #ffffdd'
          : 'background: #ffdddd'
      }">
        <strong style="${
          item.fit === 'good'
            ? 'color: #447733'
            : item.fit === 'medium'
            ? 'color: #777733'
            : 'color: #773333'
        }">
          ${item.fit}
        </strong>: ${item.fit_reason}
      </td>
    </tr>`,
      )
      .join("")}
  </table>`;

return { htmlTable };
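Job titles and summaries come from third-party pages, so they may contain characters such as `<` or `&` that would break the table markup. A possible hardening step, which is not part of the original workflow, is to escape each value before interpolating it. The helper name `escapeHtml` is hypothetical.

```javascript
// Characters with a special meaning in HTML and their entity replacements.
const HTML_ESCAPES = {
  "&": "&amp;",
  "<": "&lt;",
  ">": "&gt;",
  '"': "&quot;",
  "'": "&#39;",
};

// Convert any value to a string that is safe to place inside HTML text
// or a double-quoted attribute. Missing values become an empty string.
function escapeHtml(value) {
  return String(value ?? "").replace(/[&<>"']/g, (char) => HTML_ESCAPES[char]);
}

console.log(escapeHtml('R&D <Lead> "Frontend"'));
// → R&amp;D &lt;Lead&gt; &quot;Frontend&quot;
```

In the table code, this would mean writing `${escapeHtml(item.title)}` instead of `${item.title}`, and likewise for the other fields.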

To email the result, you’ll need the number of job offers too. Use Merge again (this time to combine the original array and the HTML string), then connect it to a Send Email node.

Example subject:

Soham Bot: We have discovered {{ $json.data.length }} job offers

And for the body, we just use {{ $json.htmlTable }}.

Configure the email using Gmail or any provider supported by n8n.

That’s it!

Click Execute Workflow, and if everything’s set up properly, you’ll receive a daily email with job offers tailored to your profile, including a clear fit rating for each one.

Conclusion

We’ve just learned how you can use n8n with Handinger to automate your job search.

n8n makes it very easy to create complex workflows, and with Handinger’s /llm endpoint, you can go beyond extracting data: in our example, we matched our profile against the job offers to get an assessment of whether we are a good fit for each one. This is the kind of problem that was unsolvable 3 years ago!

While we focused on job hunting, these techniques apply to many workflows: content aggregation, lead generation, newsletter creation, and more.

If you build something cool, share it with us. Happy automating!